UX

prototyping

research

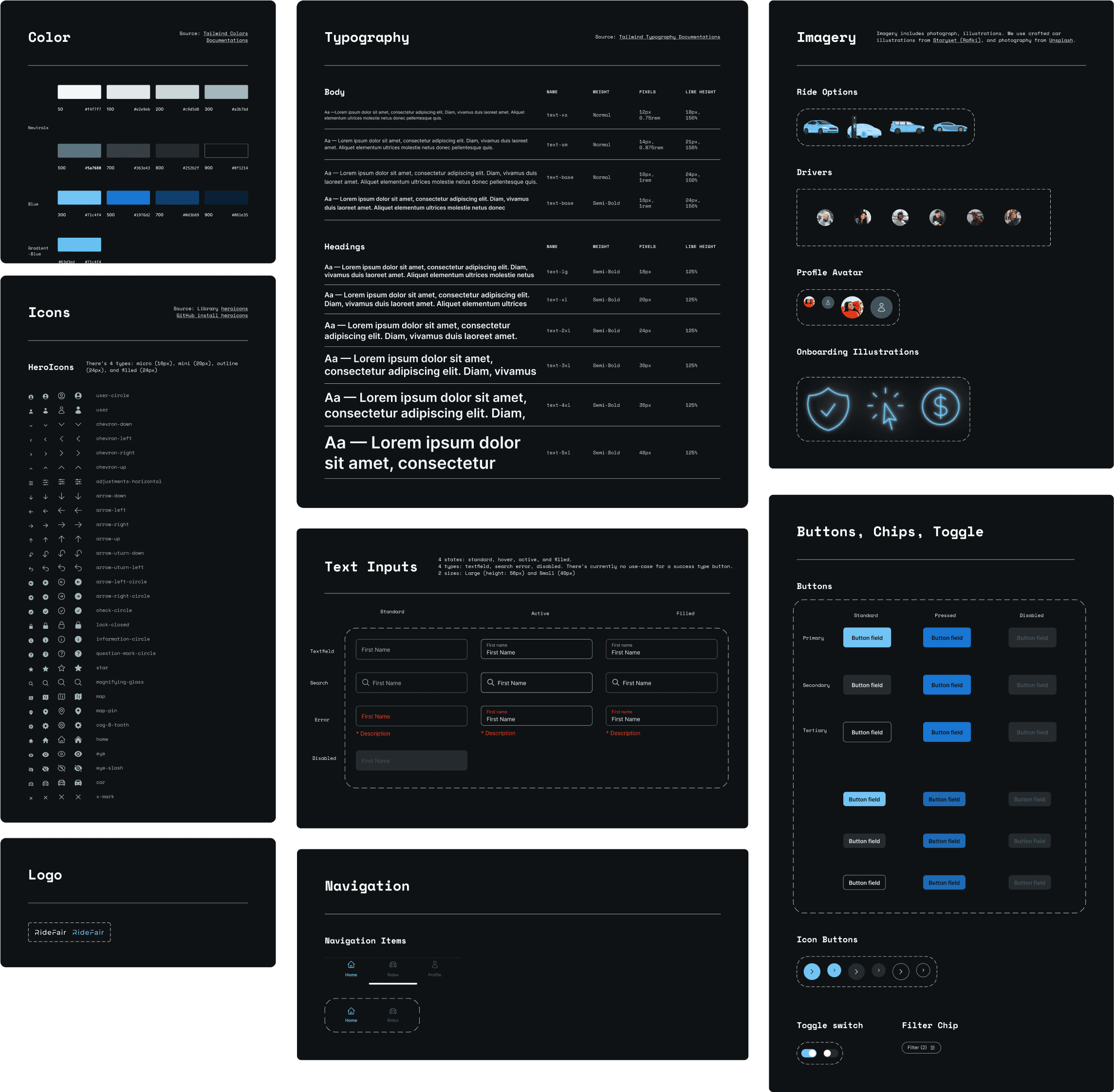

design system

visual design

Problem

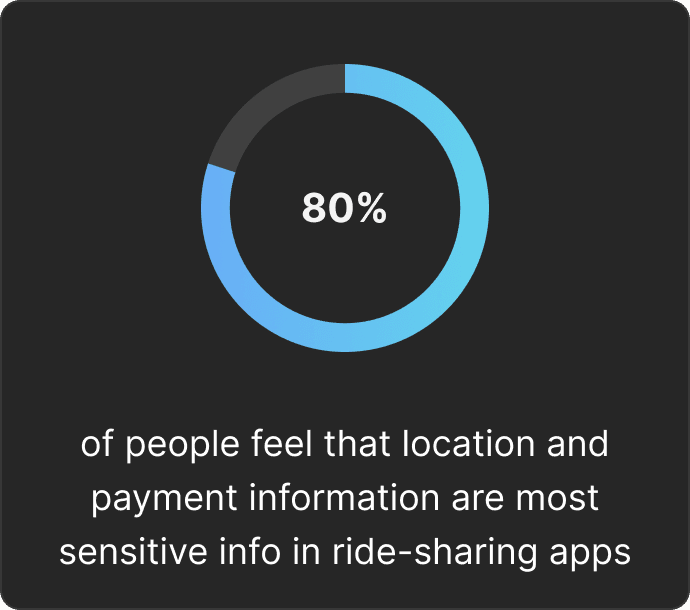

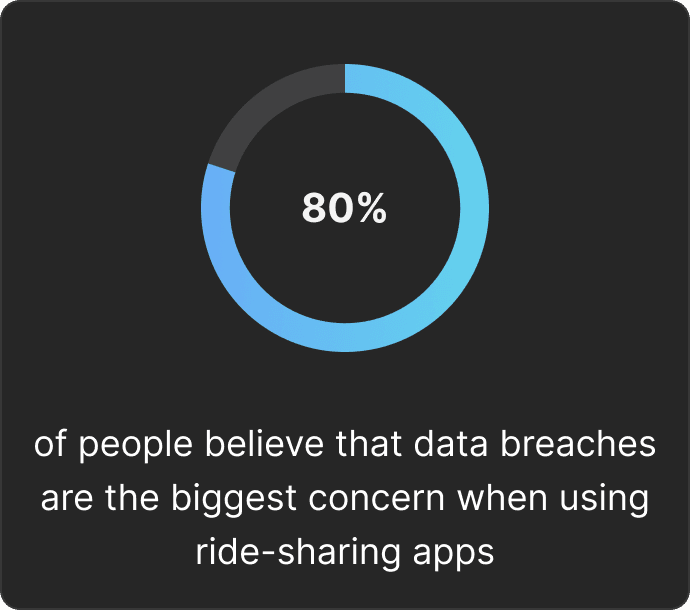

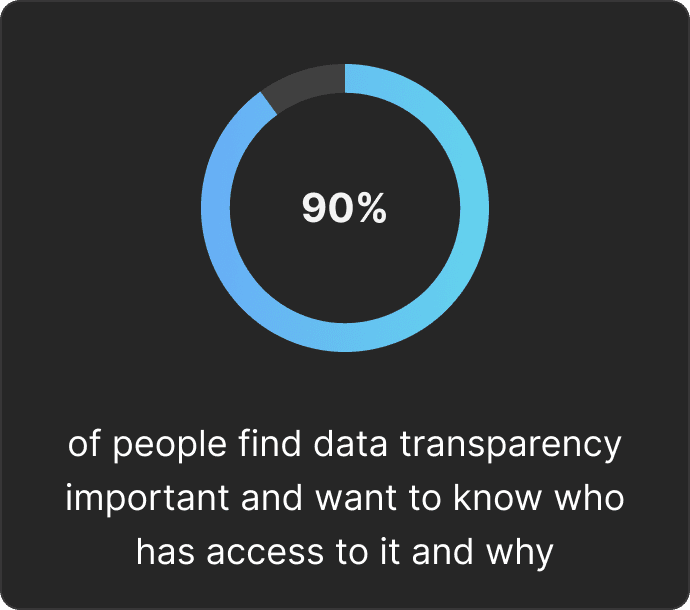

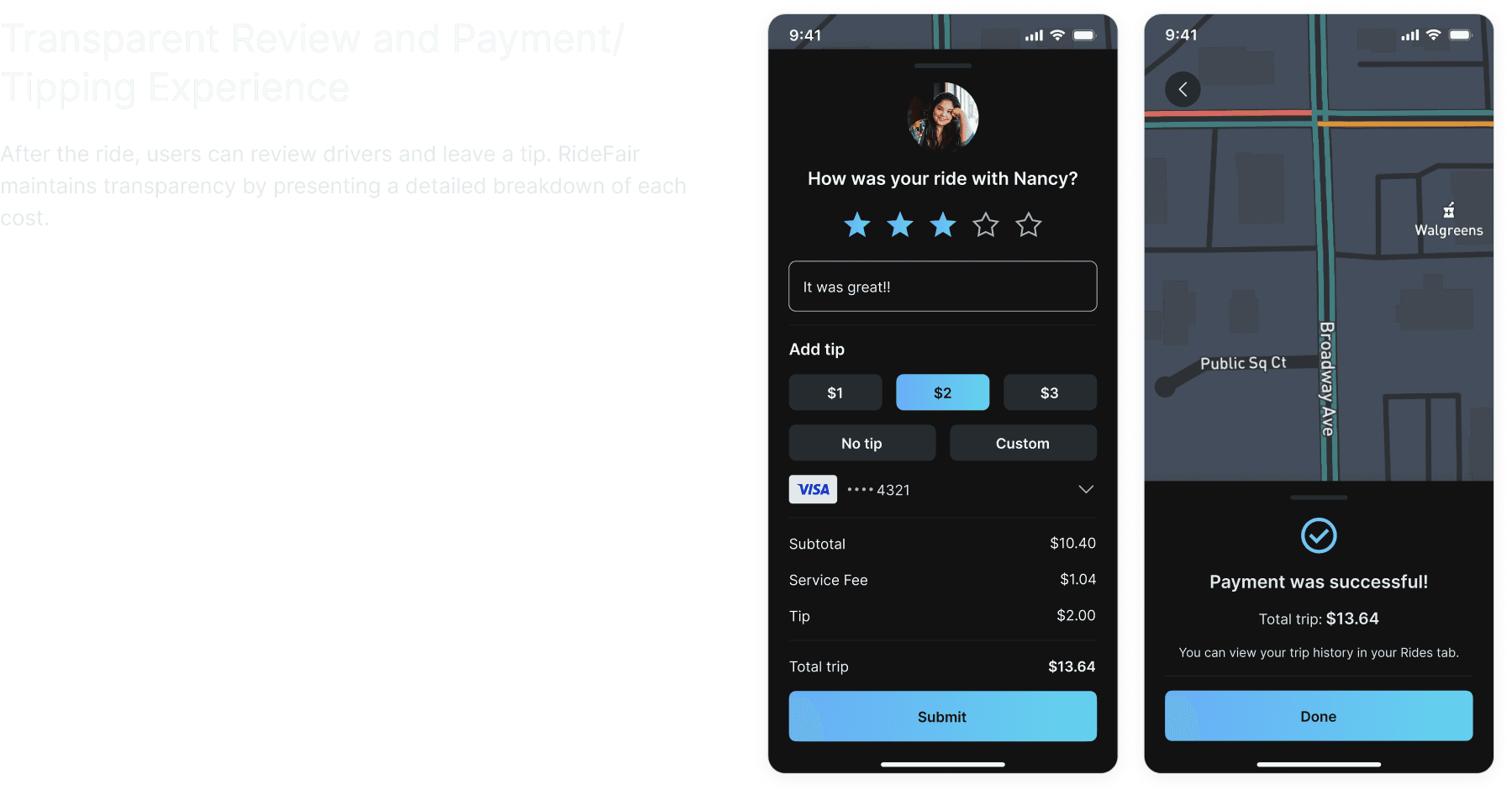

Currently, web2 ride-sharing companies are harvesting user data and potentially selling it to third parties, which makes users targets for data breaches, hacking, ads, and more.

How might we develop a ride-sharing application that empowers users to maintain control over their personal data, ensuring a secure and confident travel experience?

Discover and Define

The travel & transport industry is one of the least trusted industries when it comes to sharing data (BCG 2022). Because users are most concerned about the misuse of their data by products in this industry, we wanted to tackle this problem space.

Figma

Maze

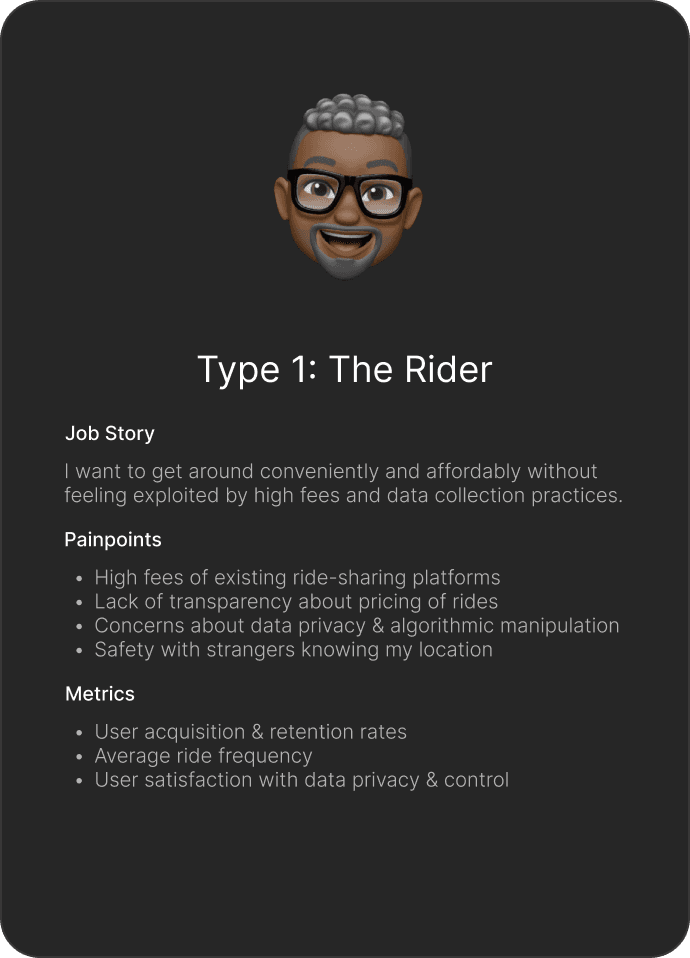

Defining 2 User Types: Rider and Driver

While our primary focus for the MVP app was the rider’s perspective, we wanted to acknowledge the driver’s pain points and goals. We treated drivers as a key stakeholder group that we would need to design for and cater to in future iterations.

User Journey Mapping

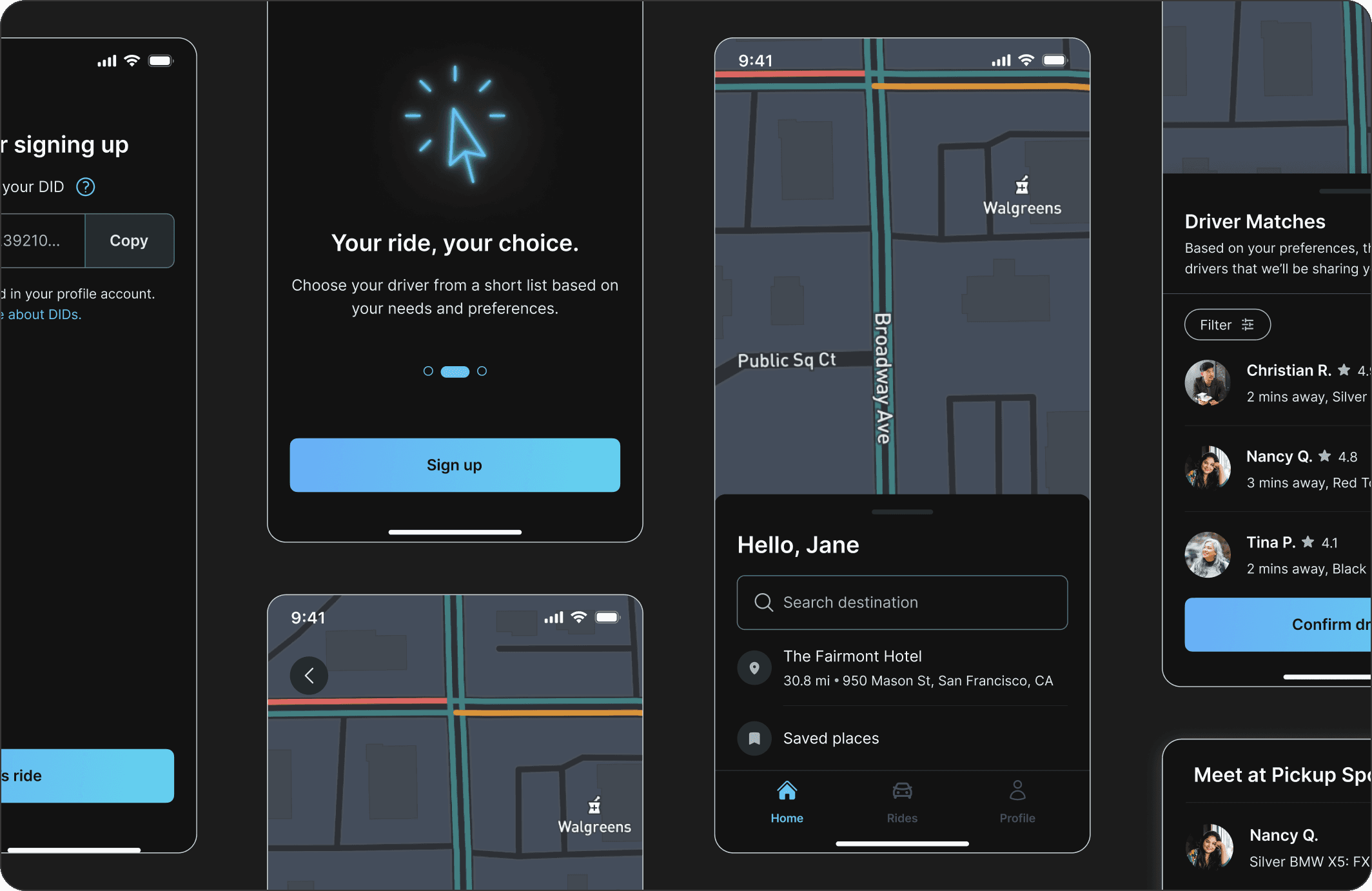

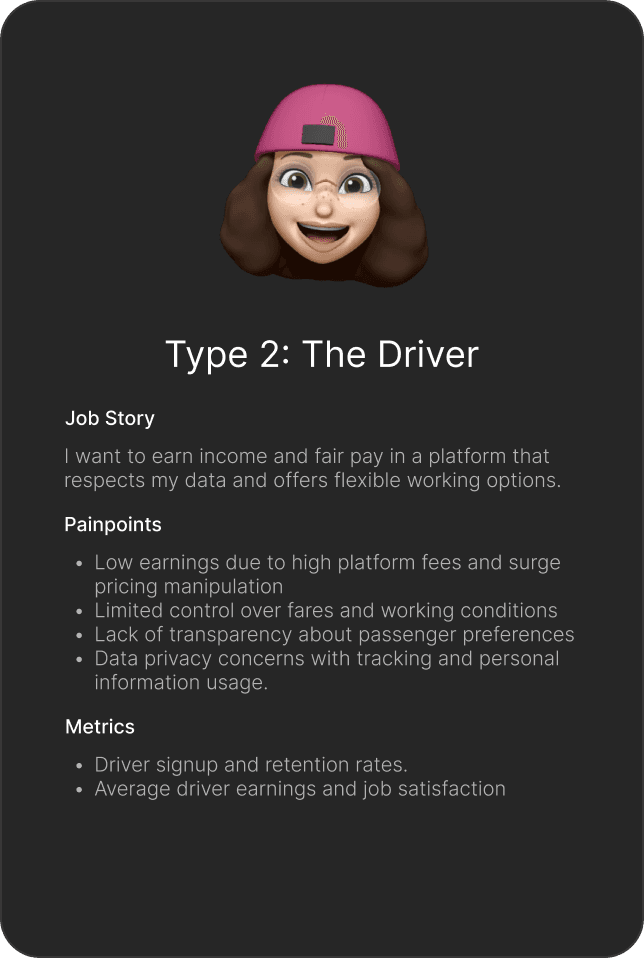

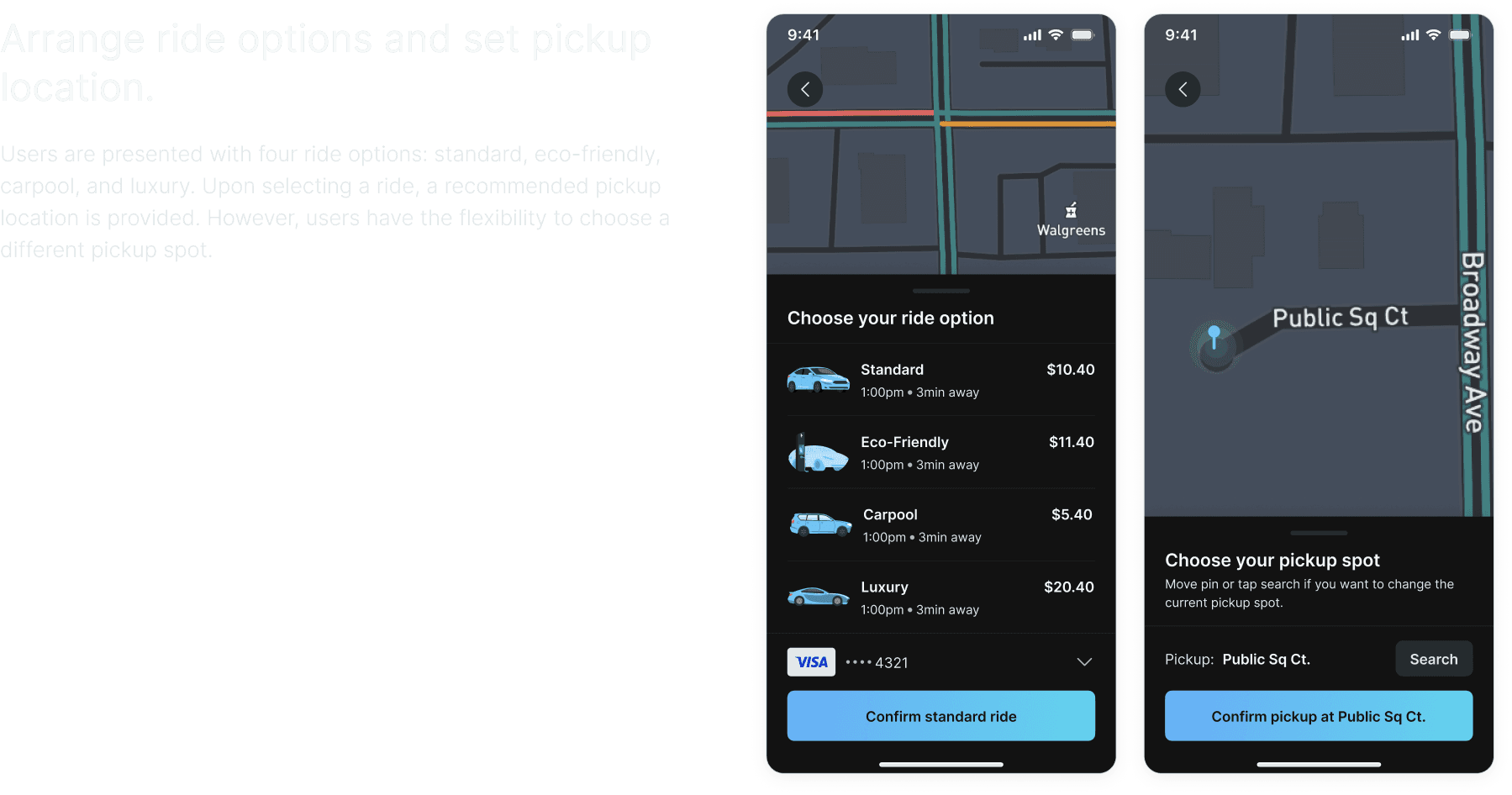

We determined two major pain points in the user journey to focus on.

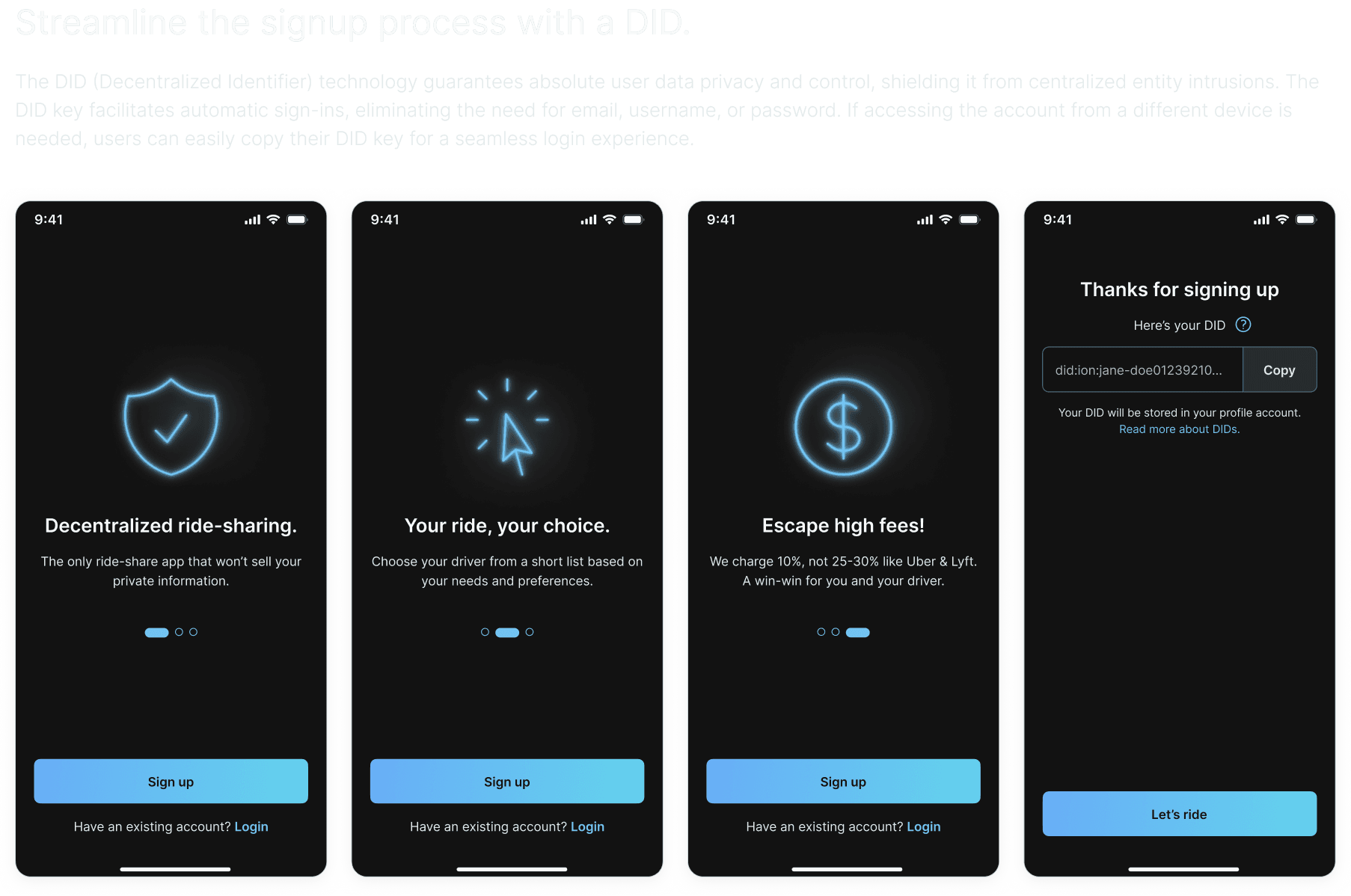

Setting up an account. Because users' information is sensitive, account setup is a barrier to entry that keeps more users from engaging with ride-sharing apps.

Selecting a driver. People are apprehensive about meeting strangers, much less getting into a car with them. We want to alleviate this stress as much as possible.

User Flows

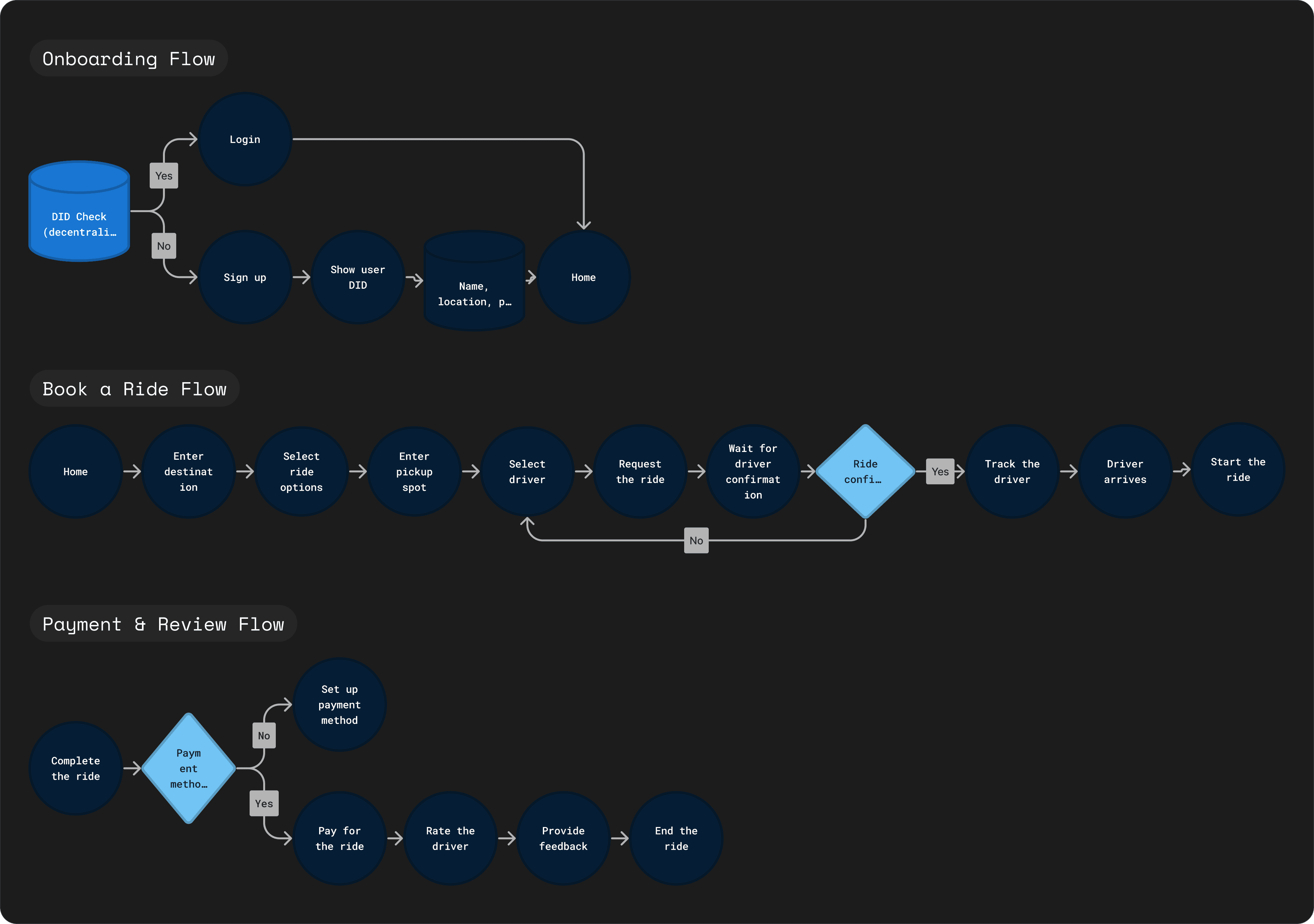

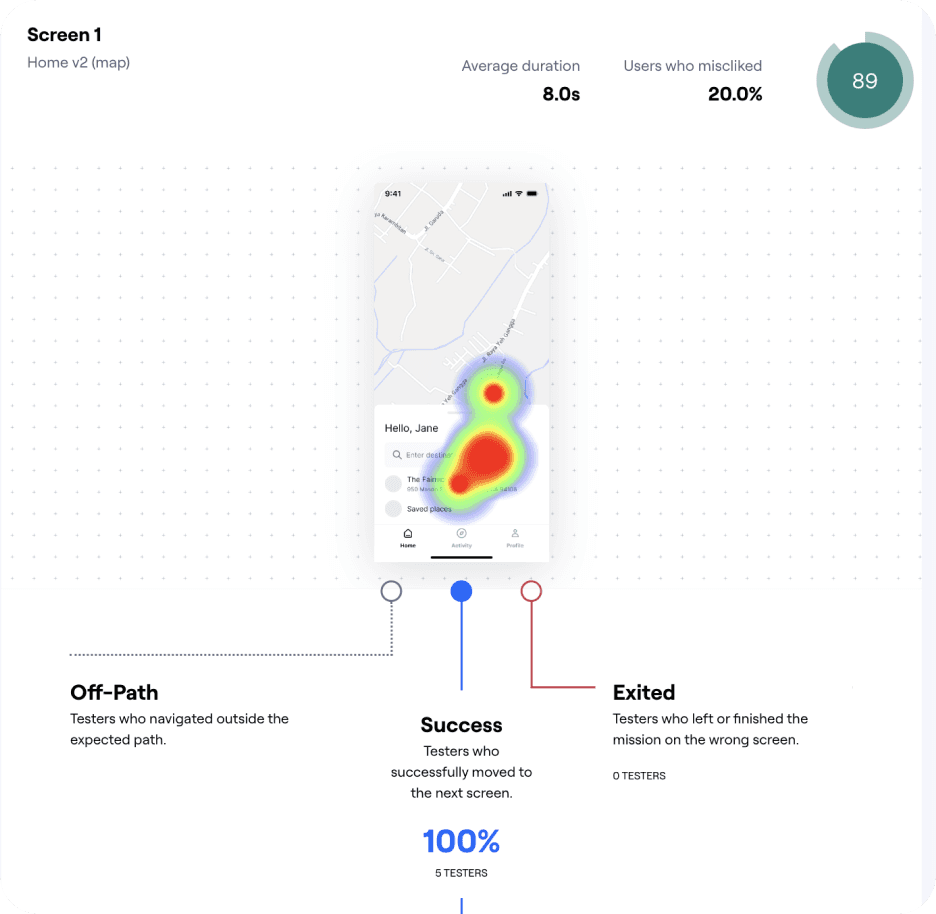

Testing Wireframes

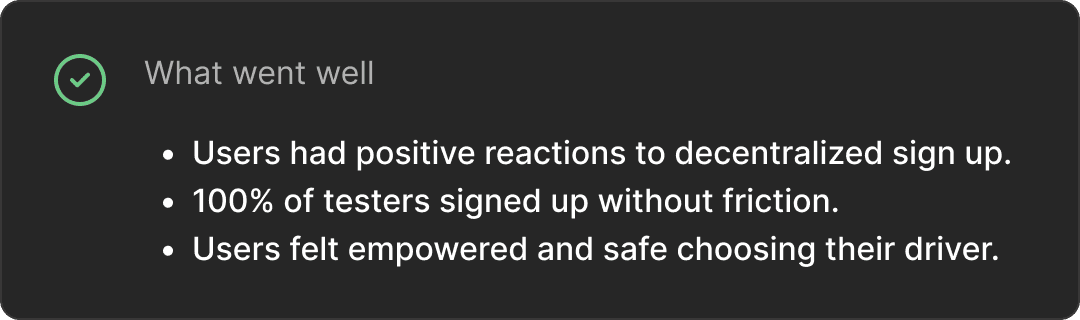

After creating a low-fidelity prototype, we set up a guided usability test using the tool Maze. We focused on two flows:

1. Onboarding: Were people able to understand our app’s onboarding? Specifically, did they feel more or less secure about their data privacy given that our app is decentralized? Were they able to sign up without friction?

2. Booking a ride: Did our hypothesis of matching drivers to riders make them feel more secure? If so, how should we optimize it? Where did they have the most friction when booking a ride?

Design Improvements

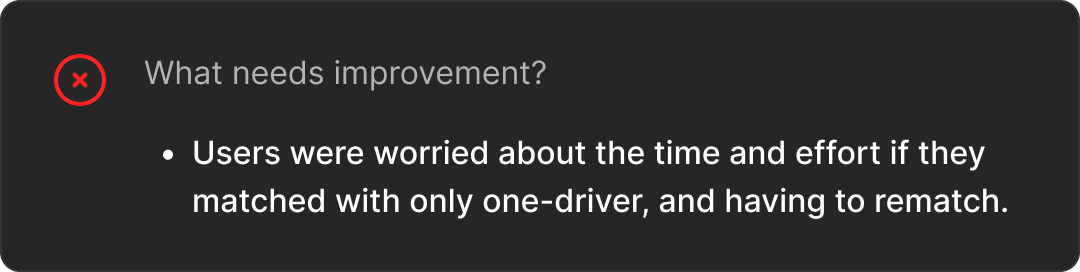

Improvement #1 - Easier login

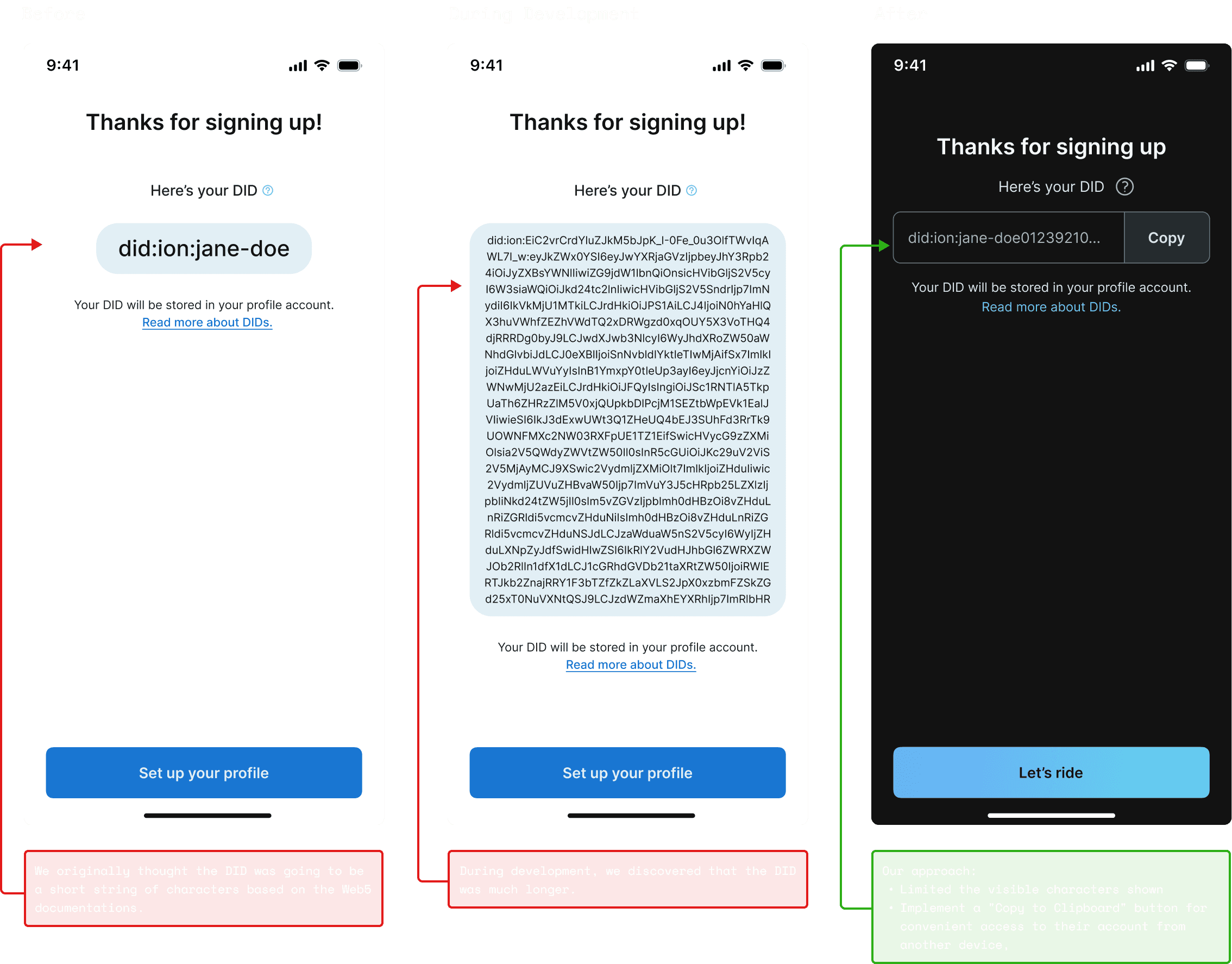

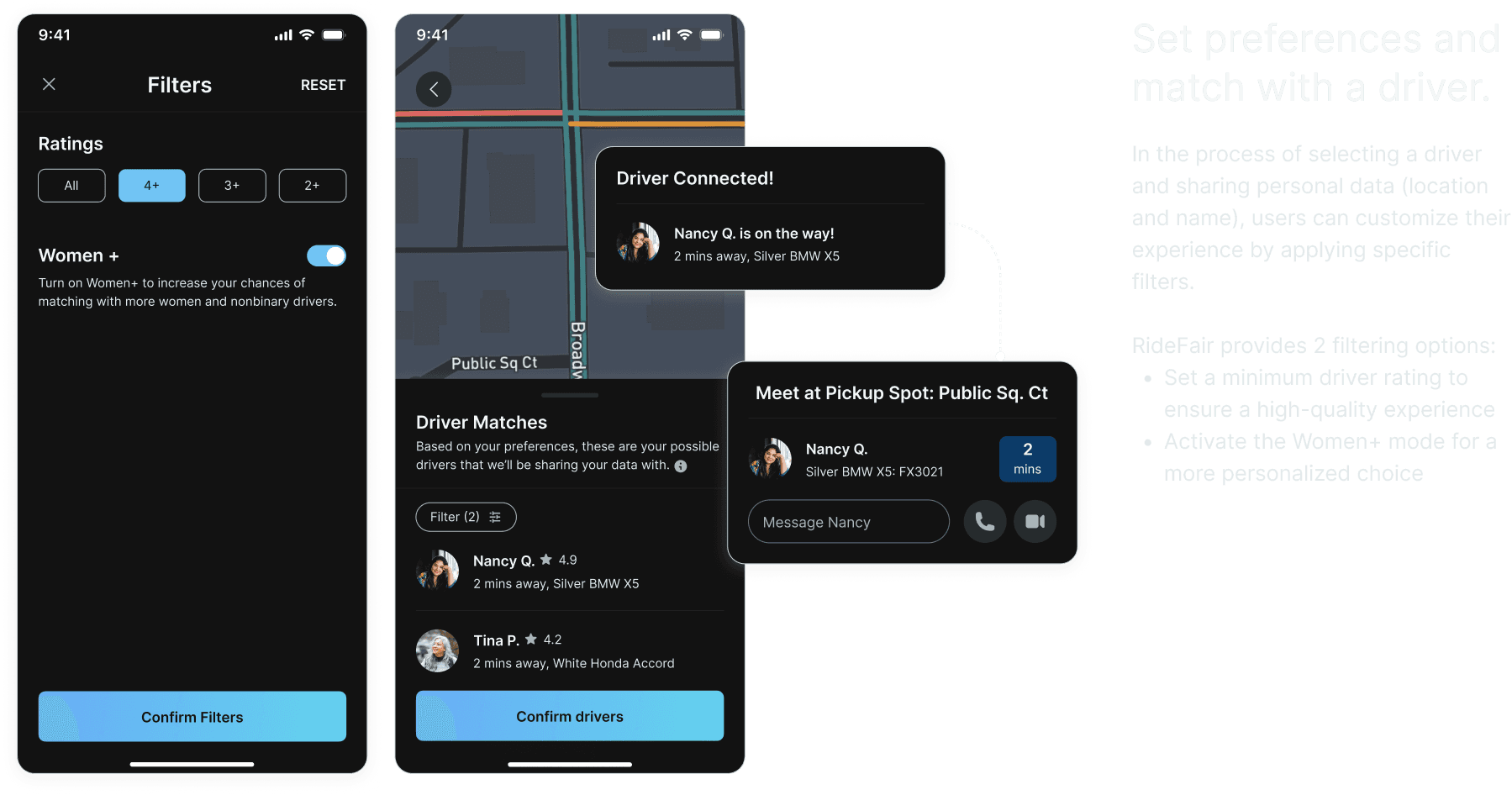

Initially, we restricted users to selecting only one driver, which resulted in longer wait times. To empower riders in their choices and mitigate bias, we introduced the option for users to filter based on preferences, including ratings and a specific category for women and non-binary drivers. Because we recognized the potential for bias or profiling against certain drivers in our initial approach, we opted for a direction that still lets riders narrow their selection while upholding ethical standards.

Improvement #2 - Driver matching to improve safety, speed, & equity

Our initial approach of allowing users to choose their driver resulted in two issues:

1. If that driver isn't available, the rider must rematch with another driver, leading to longer wait times.

To solve this, we established a driver queue. Riders confirm sharing their ride request with all drivers in the queue. The first driver has 10 seconds to accept; if they do not, the request moves to the next driver in the queue, streamlining the process.

2. This method had the potential for bias or profiling against certain drivers.

We knew that allowing for user choice would come at a cost. We also discovered that 91% of rape victims were riders and, of these, 81% were women (CNN.com, 2022). So, we decided to prioritize these groups. We created two filters: one that allows users to filter for higher ratings and one for women and LGBTQ+ drivers.
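The driver queue and the two filters described above could be sketched roughly as follows. This is an illustrative sketch only, not the app's actual implementation: the `Driver` fields, `filter_drivers`, `offer_ride`, and the `wait_for_response` callback are all hypothetical names, and the real accept window would be enforced by a timer on the backend rather than by a simulated callback. The only value taken from the case study is the 10-second accept window.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# From the case study: each driver in the queue has 10 seconds to accept.
ACCEPT_WINDOW_SECONDS = 10


@dataclass(frozen=True)
class Driver:
    # All fields are hypothetical; the case study does not define a data model.
    name: str
    rating: float
    in_women_lgbtq_category: bool  # assumed to be an opt-in flag set by the driver


def filter_drivers(
    drivers: List[Driver],
    min_rating: Optional[float] = None,
    women_and_lgbtq_only: bool = False,
) -> List[Driver]:
    """Apply the two rider-facing filters while preserving queue order."""
    result = []
    for driver in drivers:
        if min_rating is not None and driver.rating < min_rating:
            continue
        if women_and_lgbtq_only and not driver.in_women_lgbtq_category:
            continue
        result.append(driver)
    return result


def offer_ride(
    queue: List[Driver],
    wait_for_response: Callable[[Driver, int], bool],
) -> Optional[Driver]:
    """Offer the ride to each driver in turn.

    wait_for_response(driver, timeout) is assumed to return True only if the
    driver accepts within `timeout` seconds. Returns the first driver who
    accepts, or None if the queue is exhausted.
    """
    for driver in queue:
        if wait_for_response(driver, ACCEPT_WINDOW_SECONDS):
            return driver
    return None


# Usage with simulated responses: Ana lets the window lapse, Cy accepts.
drivers = [
    Driver("Ana", 4.9, True),
    Driver("Ben", 4.2, False),
    Driver("Cy", 4.8, True),
]
queue = filter_drivers(drivers, min_rating=4.5, women_and_lgbtq_only=True)
responses = {"Ana": False, "Cy": True}
matched = offer_ride(queue, lambda driver, timeout: responses[driver.name])
```

Because the rider never picks an individual driver, the filters narrow the pool by category and rating only, and the queue then decides who gets the request. That is one way to keep rider choice while limiting the profiling risk described above.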